Table of contents

- Managing Director's corner

- NRIS: Strengthening research with distributed expertise

- Investing in the future of data-driven research and AI

- Procuring Norway's most powerful supercomputer ever

- Real-world impact: Researchers solving societal challenges

- Driving innovation in industry and public administration with HPC

- Services development and training initiatives

- Key collaborations nationally and across Europe

- How we work: sustainability, organisation, and governance

Welcome to our Annual Report for 2024

Sigma2 collaborates with the universities in Bergen, Oslo, and Tromsø, and with NTNU, to provide the Norwegian Research Infrastructure Services (NRIS). In this report, you will find highlights, key metrics, and insights from our joint efforts throughout 2024.

We are especially proud to showcase some of the groundbreaking research projects that have utilised our national services over the past year. You also get to read about new strategic initiatives, technological advancements, and updates on the many collaborations we participate in.

We hope you find our annual report informative and engaging.

Happy reading!

1. Managing Director's corner

2024 has been a year of significant progress for Norway’s e-infrastructure, marked by major investments, key technological advancements, and strengthened international collaborations.

This report highlights the milestones we have achieved and the steps we are taking to ensure Norwegian researchers have access to world-class computational resources. Below, we outline some of the most important developments from the past year.

The Research Council assessed the national demand for computing power, revealing a growing need across various sectors.

Their assessment led to a recommendation to invest 3.4 billion NOK to meet Norway's computing power needs in the coming years. The work to determine the preferred solution is underway and will continue into 2025, with the Sigma2 administration deeply involved.

The procurement process for Olivia, Norway’s next supercomputer, is well underway, marking a significant step forward in enhancing Norwegian researchers’ computational capabilities. Why the name Olivia, you may wonder? Read on, and you will discover an excellent reason!

Artificial intelligence continues to drive increasing demand for advanced computing resources in research and industry. As Norway prepares for future investments in e-infrastructure, ensuring that AI-driven research has the necessary computational capacity will be a key priority.

The NIRD Data Lake expansion added substantial storage capacity, improving data accessibility. Additionally, the new NIRD Research Data Archive, which is in its final phase now, will further streamline data management for the research community. We are looking forward to the launch.

As part of our societal responsibility, we ensure that national e-infrastructure resources are allocated to the most eligible research projects. Our Resource Allocation Committee (RFK), composed of leading Norwegian scientists, plays a crucial role in this process.

In 2024, the committee conducted two national calls, evaluating applications based on scientific excellence. I extend my sincere gratitude to the RFK for their dedication and expertise in supporting groundbreaking research.

Once again, we have conducted our annual user survey, and we are very pleased with the feedback we received from the users of our services. Overall, we keep receiving high scores and use your feedback and suggestions for continuous service improvements and development.

The unfolding global situation is complex, with war, unrest, and a changing world order. It has rarely been more evident how important it is for a small country like Norway to maintain close relationships with other countries to secure resources through cooperation in trade, defence, and technology.

We participate in several international collaborations to enhance computational science infrastructure and services in Norway. In this report, you can read about new and established collaborations.

We hope you find our annual report for 2024 insightful.

Gunnar Bøe

Managing Director, Sigma2

2. NRIS: Strengthening research with distributed expertise

The Norwegian Research Infrastructure Services (NRIS) is a collaboration between Sigma2 and the University of Bergen, the University of Oslo, UiT The Arctic University of Norway, and NTNU. This collaboration aims to deliver national supercomputing and data storage services. The services are operated by NRIS and coordinated by Sigma2.

In this interview, Alexander Oltu from UiB, newly appointed chair of the NRIS leadership meeting, and Stein Inge Knarbakk from Sigma2 discuss NRIS' important role in supporting Norwegian researchers with e-infrastructure services. While looking back at 2024, they also cast a glance into the crystal ball for what may come.

As a distributed organisation, NRIS ensures close collaboration with research communities, enabling tailored support and efficient resource allocation. This structure provides access to broad expertise and strengthens Norway’s position in high-performance computing (HPC) and AI.

Oltu highlights how technological advancements drive the need for more powerful and flexible solutions. While supercomputing was traditionally used in academia, the increasing demand from public administration signals a shift in NRIS’s role. He notes that the Research Council of Norway is considering giving Sigma2 a broader mandate to provide HPC services beyond academia, which could represent a great change to our organisation.

Collaboration remains central to NRIS, both internally and externally. Through strong researcher engagement and a tailored support model, NRIS continues to deliver top-tier computing resources, positioning Norway for future challenges in HPC, AI, and data storage.

3. Investing in the future of data-driven research and AI

A milestone year for computing power

In recent years, the enormous development and availability of generative artificial intelligence (AI) have contributed to a massive increase in the demand for high-performance computing (HPC). 2024 was the year when the rising demand for computing power made it onto the national agenda.

The development of language models, in particular, has been in the spotlight, as it requires vast amounts of data and computing power to create accurate and effective models. Additionally, the need for computing power is increasing not only in traditionally data-intensive fields such as climate, oceanography, and health but also in the social sciences and humanities. More and more disciplines require computing power to handle complex analyses, simulations, and large datasets, driving innovation and efficiency across sectors.

A strategic Government mandate to assess Norway's computing needs

Therefore, it was good news for the national e-infrastructure when the Research Council of Norway received a mandate from the Ministry of Education and Research in April 2024 to assess the need for computing power for research and AI. The task involved mapping the scope and composition of nationally available resources today and the expected development over a five-year perspective.

The report, published in August, confirmed a significant and rapidly growing need for computing power in research, public administration, and industry. The Research Council highlighted that computing capacity, infrastructure, and support services for data management are crucial for leveraging technological opportunities and the extensive amount of data available.

Also, comparable countries invest significantly in national high-performance computing resources and actively participate in the European high-performance computing collaboration, EuroHPC Joint Undertaking, which you can read more about under the section "Key collaborations nationally and across Europe" in this annual report.

Investment recommendations

Based on their report, the Research Council initially recommended investing at least 2.6 billion NOK in HPC resources over the next five years. An investment of this magnitude requires a concept evaluation study to assess the need for computing power and how a national infrastructure for computing power for research, administration, and AI should be organised.

While this work went on in the background, additional and more immediate good news was delivered to Sigma2, in person, by no fewer than two ministers.

We were awarded 192.8 million NOK from RCN's INFRASTRUCTURE programme and another 40 million NOK to fund the development of Norwegian language models. The first grant will co-finance a new supercomputer to replace Betzy. The second will support the Mímir language model project, a unique collaboration between the National Library, NTNU, UiO and Sigma2.

The Ministers of Research and Higher Education and Digitalisation and Public Administration brought good news to Norwegian researchers.

The concept evaluation study began in late autumn 2024, with broad involvement and participation from Sigma2, the BOTT universities, and other stakeholders, aiming to find the best alternative for further development.

Looking ahead: Major changes on the horizon

The concept assessment report, published in January 2025, confirmed a rapidly growing need for supercomputing in research, public administration, and industry. The recommended investment is now 3.4 billion NOK, 800 million more than previously estimated.

The report advocates for a single provider, Sigma2, to manage supercomputing needs across sectors. This approach will yield significant economic benefits, enhance security, efficiency, and decision-making support, and promote cross-sector collaboration.

We are excited about what is to come and are committed to contributing to the Research Council's further work.

4. Procuring Norway's most powerful supercomputer ever

Looking to the near future of high-performance computing (HPC) and addressing researchers' immediate needs, one significant event last year was the completion of the procurement process for what will become Norway's next supercomputer, the most powerful yet. This milestone represents a major step in our commitment to advancing computational capabilities and supporting cutting-edge research.

A key role in data-driven research and AI

In the spring of 2024, Hewlett-Packard Norge AS (HPE) won the competition and was awarded the 225 million NOK contract. HPE will deliver a Cray Supercomputing EX system with 304 of the most advanced GPUs currently available. These GPUs offer significant computational power and are compatible with software and programming languages used in data-driven research.

Norway's most powerful supercomputer is coming soon!

Olivia is expected to play a key role in developing artificial intelligence (AI) and in advancing and improving Norwegian language models. With her immense computing power, Olivia will also facilitate research in scientific disciplines like health, marine, and climate research.

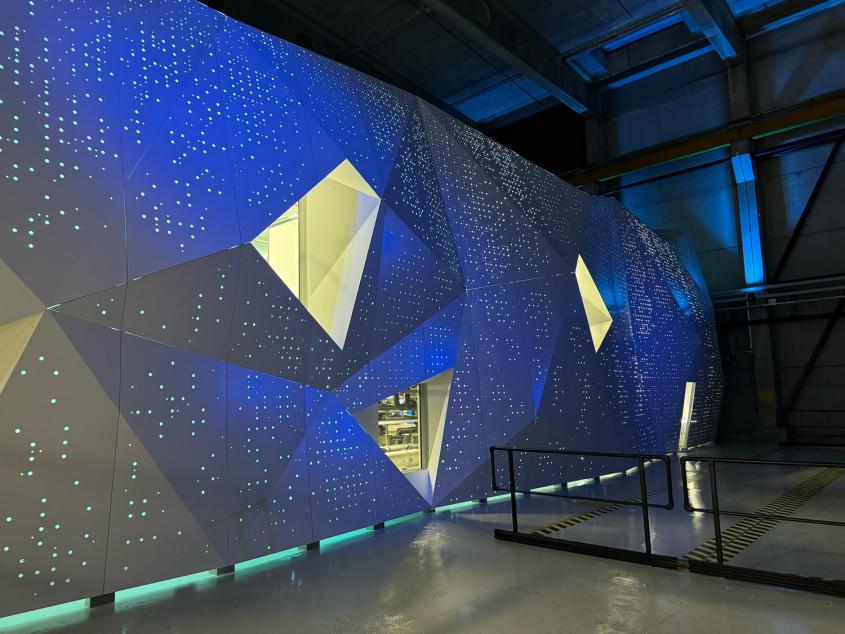

In August, we organised a naming competition where all members of NRIS were invited to vote on the name of the new supercomputer. The name Olivia received the most votes. It refers to the mineral olivine, which was previously extracted from Lefdal Mine, now converted into Lefdal Mine Data Centers (LMD), where Olivia will be housed.

Powerful, efficient, and built for the future

Olivia will represent an impressive concentration of computing power per cubic centimetre, with provisions for future expansion and upgrades. She will combine exceptional performance with energy efficiency, reducing her carbon footprint by 30% compared to Betzy, Norway's most powerful supercomputer since 2021.

Olivia becomes the name of Norway's next supercomputer

Our Preparations for Operation Working Group (POWG), consisting of NRIS experts, has worked tirelessly to ensure Olivia meets the needs of Norwegian scientists when it launches in 2025.

POWG has focused on understanding researchers' needs and developing services. In October, they gained early access to the highly anticipated NVIDIA GH200 GPUs to familiarise themselves with Olivia’s system. Initial testing was promising, and the team will continue working to ensure a seamless experience for Norwegian scientists from day one.

We regularly share updates on LinkedIn about POWG’s efforts to prepare Olivia, so those interested are welcome to connect with us.

Olivia will be a sustainable tool that supports Norway's ambitions for advancing green technology. We present more of our work on sustainability below.

Additionally, we’ve launched a dedicated landing page for Olivia with everything you need to know about Norway’s most powerful supercomputer.

Olivia will be installed in LMD during the spring of 2025 and open to researchers in the autumn.

5. Real-world impact: Researchers solving societal challenges

Researchers across diverse fields—from AI and language models to power production planning, weather forecasting, and climate research—use our various services to address critical societal challenges.

We are happy to share eight new use cases highlighting how our services support groundbreaking research to enhance energy efficiency, advance climate modelling, and drive scientific innovation.


The researchers using the national services

The rapid development of technology and digital tools continues to drive the growing need for HPC and storage services across scientific disciplines.

In 2024, national systems supported nearly 700 projects and almost 2,400 users. The projects were allocated 1.488 billion CPU-hours and 51.3 petabytes of storage capacity in total.

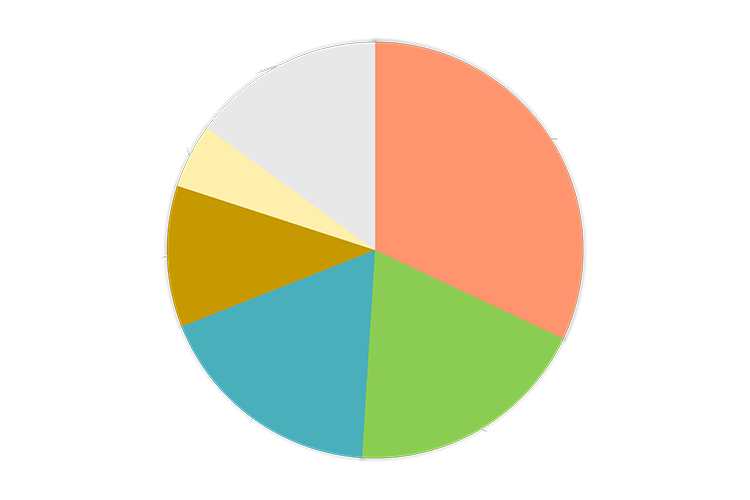

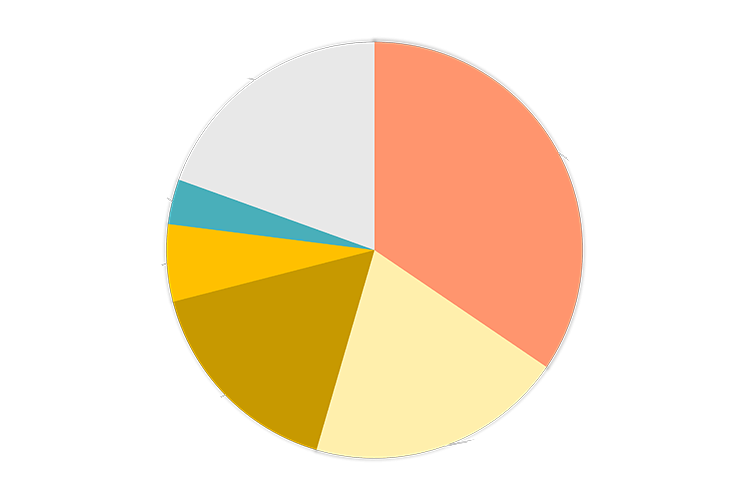

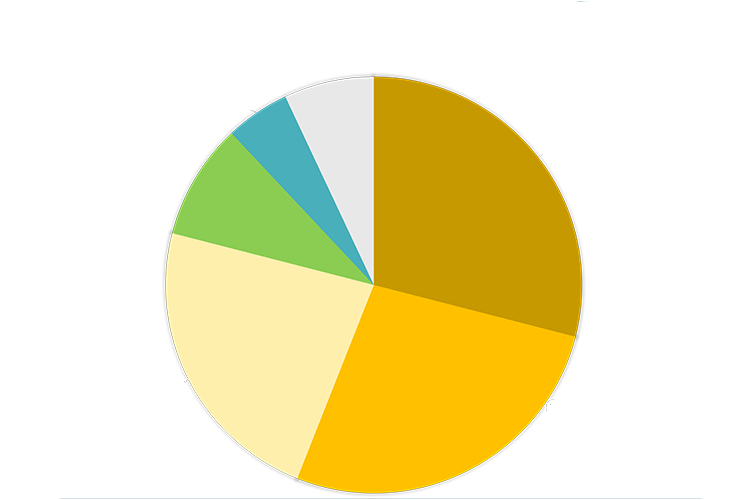

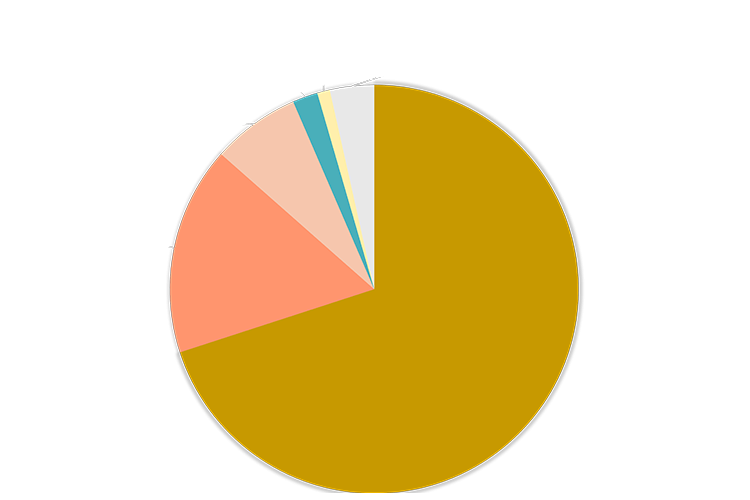

Top usage in 2024 by institution and fields of science

Top usage by institutions, in terms of allocated HPC resources, is distributed roughly the same as last year. For storage, we see a large increase for UiO and a decrease for NORCE.

Like last year, geosciences remain the largest consumer of computing and storage resources.

In terms of storage, geosciences—driven by the climate research community—have seen a tremendous increase, further solidifying their position as the largest consumer, while biosciences have experienced a decline. Below are diagrams illustrating the primary users, categorised by scientific field.

Top 5 HPC projects

Some of the many research projects we support stand out due to their significant computing needs.

This section highlights the top five HPC projects by resource consumption, demonstrating the impact of advanced computing on scientific discovery.


1. Solar Atmospheric Modelling

The Sun's impact on Earth is crucial, affecting human health, technology, and critical infrastructures. Solar magnetism is central to understanding the Sun's magnetic field, activity cycle, and environmental effects.

Mats Carlsson and his fellow researchers are at the forefront of modelling the Sun's atmosphere with the Bifrost code. This aids comprehension of the Sun's outer magnetic atmosphere, influencing phenomena like solar wind, satellites, and climate patterns.

- Project Leader: Mats Carlsson

- Institution: Rosseland Centre for Solar Physics (ROCS), UiO

- Consumed CPU-hours: 238.4 million

2. Combustion of hydrogen blends in gas turbines at reheat conditions

Research into Carbon Capture and Storage (CCS) for fossil fuel-based power generation is crucial in mitigating climate change. With modern gas turbines (GTs) finely tuned for conventional fuel mixtures, CCS introduces challenges due to variations in fuel and operating conditions.

To address this, high-resolution computational fluid dynamics is used to study hydrogen-blend combustion in gas turbines, aiming to understand thermo-acoustic behaviour in staged combustion chambers. This research uses Sandia NL's S3D code and supercomputing resources to ensure stable and safe gas turbine operation, contributing to climate change mitigation efforts.

- Project Leader: Andrea Gruber

- Institution: SINTEF Energi AS

- Consumed CPU-hours: 189.6 million

3. Heat and Mass Transfer in Turbulent Suspensions

This project focuses on performing detailed simulations of heat and mass transfer in two-phase systems, involving dilute suspensions of particles or bubbles in gas or liquid flows. These systems are common in both natural and technological settings.

Building upon advancements in flow simulation algorithms, particularly in the areas of turbulent heat transfer and droplet evaporation, the project seeks to explore the added complexity introduced by phase-change thermodynamics. This complexity includes variable droplet size and phenomena like film boiling, marking a significant advancement from previous studies.

- Project Leader: Luca Brandt

- Institution: Department of Energy and Process Engineering, NTNU

- Consumed CPU-hours: 93.3 million

4. Bjerknes Climate Prediction Unit

The Bjerknes Climate Prediction Unit focuses on developing prediction models to address climate and weather-related challenges. These include predicting precipitation for hydroelectric power, sea surface temperatures for fisheries, and sea-ice conditions for the shipping industry.

Bridging the gap between short-term weather forecasts and long-term climate projections, their goal is to create a highly accurate prediction system for northern climate. This involves understanding climate variability, developing data assimilation methods, and exploring the limits of predictability.

- Project Leader: Noel Sebastian Keenlyside

- Institution: Geophysical Institute, UiB

- Consumed CPU-hours: 87.6 million

5. Extreme Planetary Interiors

The Water and Hydrogen Dynamics Project focuses on understanding the role of water and hydrogen in the Earth's mantle. By investigating diffusion in key silicate minerals, this project aims to determine how these elements contribute to plate tectonics and mantle dynamics.

They use ab initio molecular dynamics simulations to study diffusion rates and paths in minerals like olivine and pyroxenes. Findings will help interpret experimental data and geophysical observations, enhancing our knowledge of Earth's internal processes.

- Project Leader: Razvan Caracas

- Institution: Department of Geosciences, UiO

- Consumed CPU-hours: 44.5 million

Top 5 storage projects

As research becomes increasingly data-intensive, efficient storage is essential for handling vast amounts of information.

This section highlights the top five projects with the highest data storage consumption, showing how researchers utilise large-scale storage to drive scientific progress.


1. High-Performance Language Technologies

This timely project addresses the rapid advancements in Natural Language Processing (NLP) and its strong presence in Norwegian universities.

It focuses on countering the dominance of major American and Chinese tech companies in training Very Large Language Models (VLLMs) and Machine Translation (MT) systems, which have wide applications.

To foster diversity, the project aims to lower entry barriers by establishing a European language data space, facilitating data gathering, computation, and reproducibility for universities and industries to develop free language and translation models across European languages and beyond.

- Project Leader: Stephan Oepen

- Institution: Department of Informatics, UiO

- Consumed storage space: 3,099.5 TB

2. Storage for Nationally Coordinated NorESM Experiments

The Norwegian climate research community has developed and maintained the Norwegian Earth System Model (NorESM) since 2007. NorESM has been instrumental in international climate assessments, including the 5th IPCC report through CMIP5.

The consortium, comprising multiple research institutions, is dedicated to contributing to the next phase, CMIP6, in partnership with global modelling centres. This collaborative effort seeks to advance our knowledge of climate change and provide accessible data to scientists, policymakers and the public.

- Project Leader: Mats Bentsen

- Institution: NORCE Norwegian Research Centre AS

- Consumed storage space: 1,951.5 TB

3. Bjerknes Climate Prediction Unit

The Bjerknes Climate Prediction Unit focuses on developing advanced prediction models to address climate and weather-related challenges, such as predicting precipitation for hydroelectric power, sea surface temperatures for fisheries, and sea-ice conditions for shipping.

They aim to bridge the gap between short-term weather forecasts and long-term climate projections by assimilating real-world weather data into climate models. This project faces three key challenges: understanding predictability mechanisms, developing data assimilation methods, and assessing climate predictability limits.

- Project Leader: Noel Sebastian Keenlyside

- Institution: Geophysical Institute, UiB

- Consumed storage space: 1,589 TB

4. Storage for INES—Infrastructure for Norwegian Earth System Modelling

The INES project, led by Norwegian climate research institutions, maintains and enhances the Norwegian Earth System Model (NorESM). Its goals include providing a cutting-edge Earth System Model, efficient simulation infrastructure, and compatibility with international climate data standards.

The Norwegian Climate Modeling Consortium plans to increase contributions for further development and alignment with research objectives.

- Project Leader: Terje Koren Berntsen

- Institution: Department of Geosciences, UiO

- Consumed storage space: 1,306 TB

5. High-Latitude Coastal Circulation Modelling

This project, managed by Akvaplan-niva, encompasses physical oceanographic modelling activities, covering coastal ocean circulation modelling in the Arctic, Antarctic, and along the Norwegian coast.

These activities involve storing significant amounts of data, including high-resolution coastal models and atmospheric model output needed to force the ocean circulation models. To achieve high resolution in various regions, we must store substantial model data and results from specific simulations.

- Project Leader: Magnus Drivdal

- Institution: Akvaplan-niva AS

- Consumed storage space: 646.5 TB

6. Driving innovation in industry and public administration with HPC

The National Competence Centre for HPC (NCC) supports the industry, public, and academic sectors by providing access to the competencies needed to leverage technologies such as High-Performance Computing (HPC), Artificial Intelligence (AI), Machine Learning (ML), and related technologies.

Sigma2, SINTEF, and NORCE make up the NCC. The centre collaborates with the national EDIHs Oceanopolis and Nemonoor, as well as the DEP Norway members Digdir and Innovation Norway.

Tapping into supercomputer LUMI's power for smarter farming

DigiFarm revolutionises agriculture with Artificial Intelligence

In 2024, the NCC was involved in several notable projects. One such project was with the agri-tech company DigiFarm, which had already been using national capacity for its operations. In 2024, most of DigiFarm’s training workload was migrated to the pan-European supercomputer LUMI, of which Norway owns a part through Sigma2.

Accessing LUMI’s extreme capacity allowed DigiFarm to increase its training datasets to 40 million acres of manually delineated Sentinel-2 data. This change boosted their IoU (intersection over union, a measure of segmentation accuracy) from 0.89 to 0.94, expanded the number of detected classes (including deforestation), and reduced their time to market by as much as six months.
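For readers unfamiliar with the metric, IoU (intersection over union) compares a predicted region with a reference region: the number of pixels the two share, divided by the number of pixels covered by either. The sketch below is a minimal Python illustration only. The function name and the small 4x4 masks are hypothetical and are not DigiFarm's code or data.

```python
def intersection_over_union(predicted, reference):
    """Return the IoU of two binary masks given as equally sized 2D lists of 0/1."""
    intersection = 0
    union = 0
    for pred_row, ref_row in zip(predicted, reference):
        for p, r in zip(pred_row, ref_row):
            if p and r:
                intersection += 1  # pixel marked in both masks
            if p or r:
                union += 1  # pixel marked in at least one mask
    # Two empty masks agree perfectly; return 1.0 to avoid dividing by zero.
    return intersection / union if union else 1.0


# Hypothetical 4x4 field masks (1 = pixel belongs to the field).
predicted = [
    [1, 1, 0, 0],
    [1, 1, 0, 0],
    [0, 0, 0, 0],
    [0, 0, 0, 0],
]
reference = [
    [1, 1, 1, 0],
    [1, 1, 0, 0],
    [0, 0, 0, 0],
    [0, 0, 0, 0],
]

score = intersection_over_union(predicted, reference)
print(f"IoU = {score:.2f}")  # 4 shared pixels / 5 covered pixels = 0.80
```

An IoU of 1.0 means the predicted and reference regions coincide exactly, so a move from 0.89 to 0.94 indicates field boundaries that overlap the manual delineations more closely.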

In November 2024, DigiFarm was nominated for three prestigious awards at the Supercomputing 2024 conference in Atlanta, of which they won two.

Since then, DigiFarm has further improved its IoU to 0.94 and continues to benefit from the power of LUMI. DigiFarm was also granted a substantial allocation via the EuroHPC JU part of the machine. The NCC assisted with the application for resources from the joint European pool, thus bringing home Norwegian investments in the Digital Europe programme.

Collaborations and innovations in AI and real-time computing

In 2023, the NCC collaborated with Norwegian Customs to implement entity resolution for automatic data cleaning. The results were presented at the Nordic Industry Conference in Copenhagen, jointly hosted by the Nordic Competence Centres. Norwegian Customs has since expressed interest in further work on the national systems for training transformer models.

While the NCC has onboarded new institute users like the Institute for Energy Technology (IFE), focusing on traditional HPC, it has also seen growing interest from industrial users in AI-focused applications. These include training large language models (LLMs) on peer-reviewed scientific papers and developing models for audio signature detection to aid noise cancellation on edge devices.

A new trend is the interest in real-time computing, where data is sent from edge sensors to central systems for processing. An example is the Submerse project, where interrogators detect the presence near submerged optical cables. The goal is to perform signature detection on edge devices, but initially, the data must be sent centrally for model training.

Accelerating power planning with HPC

HPC is shaping the future of energy planning.

In today’s fast-paced and competitive energy market, accurate and timely power production planning is crucial for success. In 2024, SINTEF Energy applied High-Performance Computing (HPC) to transform how power generation, transmission and trading are planned. The NCC collaborated with SINTEF Energy on a proof-of-concept to restructure simulation systems, reducing simulation time from two hours to under two minutes.

This breakthrough enables energy market players to run more simulations with greater detail within critical time frames, leading to better decision-making.

Energy is a hot topic today, and we’re happy to see HPC-driven innovation playing a key role in ensuring a stable and optimised power supply for the future.

7. Services development and training initiatives

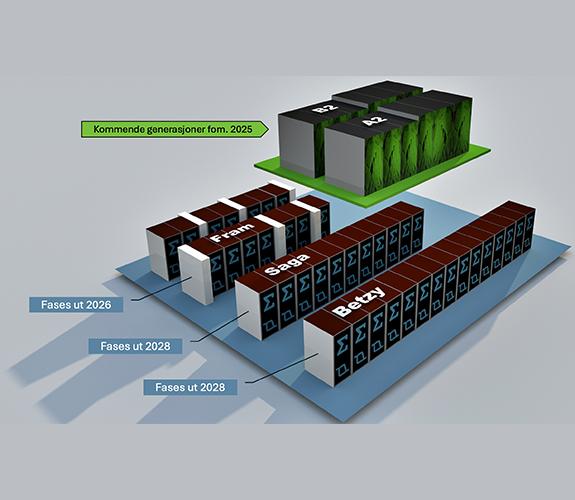

High-Performance Computing (HPC)

2024 was another year of continued operation for our HPC service. Although no new systems were put into operation, the workhorses Betzy, Fram and Saga satisfied the demand for computing resources. In addition, there was a considerable increase in demand for the LUMI GPUs, up more than 230% from last year.

Finalising the procurement process of Fram’s successor, now known as the new Olivia system, was by far the most important event on the HPC side. Thanks to the vendor competition in the final round, we received an excellent offer for Olivia. Not only will it be able to take over the CPU workload from Fram, but the new GPUs will give us an important boost in GPU computing capacity.

Interestingly enough, even though Saga’s new A100 GPUs are in service, there is still demand for the old P100 GPUs.

Since Fram was put into production in 2017, keeping it in service, particularly its cooling system, has been an increasing challenge. Although the system has remained popular, we look forward to decommissioning it after Olivia goes into production in 2025.

Last year, Betzy was the final system to successfully undergo a major operating system upgrade to Rocky 9, which has also been installed on Fram and Saga. A major upgrade requires considerable downtime, so finally seeing all systems running in production with the very same OS is a major milestone.

Also, Open OnDemand was installed and equipped with a minimum set of applications for users preferring to work with graphical interfaces rather than the command line.

Course Resources as a Service (CRaaS)

Course Resources as a Service (CRaaS) helps researchers run courses and workshops by providing easy access to national e-infrastructure resources for educational purposes.

Projects utilising CRaaS during 2024 have spanned the fields of genomics, physics, generative AI, GPU programming and computational training on our supercomputers.

Nearly 140 users have accessed the service during 2024.

NIRD Data Peak and NIRD Data Lake

In 2024, NIRD (National Infrastructure for Research Data) underwent its second expansion, increasing its total capacity to 62 petabytes (PB) across two physically separated systems in Lefdal Mine Data Centers.

The two systems, designated as NIRD Data Peak and NIRD Data Lake, are tightly connected but physically separated, and possess distinct characteristics to cater to a diverse range of use cases. Commencing with the first allocation period in 2024, the functionalities provided by Data Peak and Data Lake resources were consolidated and presented as two distinct services.

NIRD Data Peak

NIRD Data Peak is a high-performance storage service tailored for scientific projects and investigations. It accelerates data-driven discoveries by supporting high-performance computing, accommodating AI workloads and optimising intensive I/O operations cost-efficiently.

Users of the Data Peak service can request an on-demand backup service, NIRD Backup, for valuable datasets that necessitate a secondary copy.

NIRD Data Lake

NIRD Data Lake is a cloud-compatible storage service designed for sharing and storing data throughout and beyond the lifecycle of a project. Offering a unified file and object storage model, the Data Lake service strikes an optimal balance between performance, functionalities, and cost.

In 2024, NIRD Data Lake was expanded by 13 petabytes (PB), bringing its total capacity to 38 petabytes to meet the growing demand for a storage solution capable of handling structured and unstructured data, irrespective of data volume.

Sigma2 was the first organisation in Norway to pilot high-performance object storage on the IBM Storage Scale. After extensive testing of the tech-preview solution by a select group of pilot projects, the solution was upgraded and scaled out on the NIRD Data Lake in August 2024 to provide highly available, high-performance object storage. This enhancement ensures increased performance and improved reliability and scalability for cloud computing and demanding AI workloads.

Several factors have resulted in a significant increase in storage utilisation for the Data Lake service: the functionalities provided, such as object storage (S3 access); simplicity; available storage capacity; and price incentives, following a new contribution model with distinct pricing for the Data Peak and Data Lake services, launched from the 2024.2 allocation period. Specifically, the Data Lake experienced a remarkable 116% surge in storage utilisation during 2024.

NIRD Research Data Archive

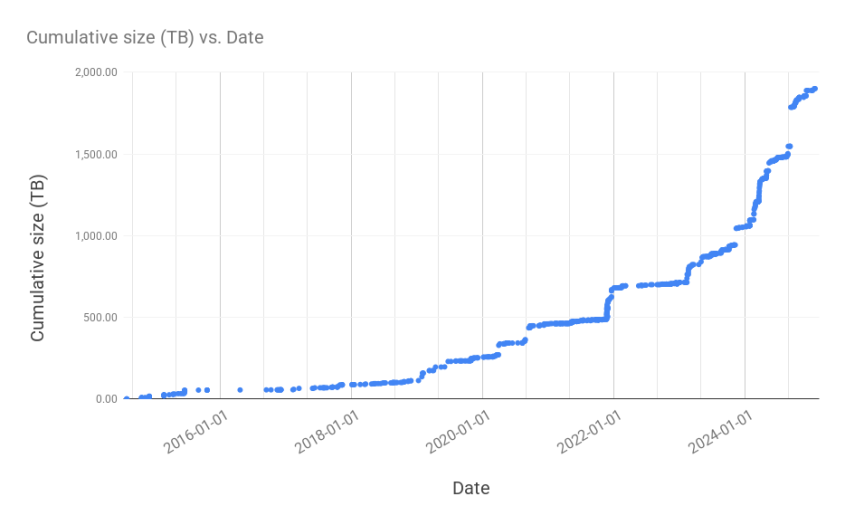

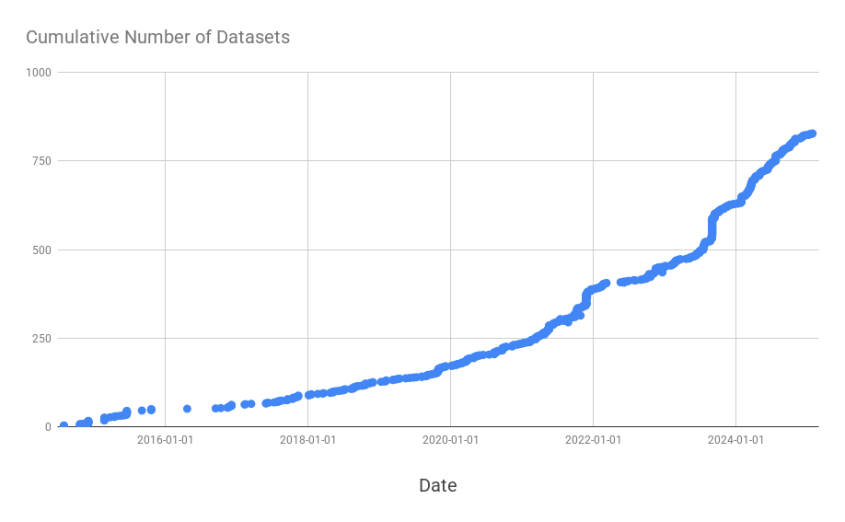

In 2024, 194 new datasets were deposited and published in the NIRD Research Data Archive (NIRD RDA), increasing the volume of the archived data by more than 837 TB in one year.

Researchers' growing awareness of FAIR and data management has resulted in an increase in the number of datasets deposited in recent years (see Figure 1 and Figure 2).

The research data landscape is undergoing rapid transformation, necessitating a corresponding evolution of archiving and publishing practices. The Open Science paradigm and the FAIR principles have catalysed the widespread accessibility of datasets through open publication. Moreover, emerging technologies and methodologies, such as artificial intelligence and machine learning, disrupt conventional paradigms.

In 2021, Sigma2 initiated a project to design, procure, and implement the next-generation NIRD Research Data Archive under the principles of user-friendliness, FAIR, and interoperability by design. Development of the next-generation NIRD RDA commenced in 2023, with an anticipated launch in Q1 2025. The new archive solution will provide a state-of-the-art, AI-enabled, and FAIR-compliant archive, fully equipped to meet researchers' future requirements.

NIRD Service Platform

With the growing volume of research data and the widening range of available technologies, the NIRD Service Platform has become indispensable for numerous research environments. Researchers can now initiate services and consume data directly from NIRD, eliminating the need to transfer data elsewhere. Data can be exposed through community-specific portals and standard web services.

The NIRD Service Platform’s hybrid CPU/GPU architecture is optimised to execute data-intensive computing workflows, including pre-/post-processing, visualisation, and AI/ML analysis, without any queuing delays. Two notable highlights are the CO2 Data Share portal and a Norwegian LLM-based (large language model) chat pilot service running on top of the NIRD Service Platform.

In 2024, we observed a 24% surge in resource requirements for services launched on the NIRD Service Platform by end-users. The average allocation was 1,311 virtual CPUs (vCPUs), while the maximum allocation reached 1,462 vCPUs.

NIRD Toolkit

NIRD Toolkit is a Software as a Service (SaaS) solution that enables the on-demand creation of software applications on the NIRD Service Platform, eliminating the need for cumbersome IT operations. With a single click, the NIRD Toolkit lets users run computations on Spark, RStudio, and numerous widely used artificial intelligence algorithms.

No substantial visible updates have been made to the NIRD Toolkit over the past year. Nevertheless, the on-demand software has undergone regular updates to ensure its continued relevance and functionality.

Sensitive Data Services (TSD)

Sensitive Data Services (TSD) is a service delivered by the University of Oslo and provided as a national service by Sigma2.

TSD offers a remote desktop solution for secure storage and high-performance computing of sensitive personal data. It is designed and set up to comply with Norwegian regulations regarding individuals’ privacy. In 2024, TSD continued to provide a robust and secure solution for handling sensitive personal data. This service is central to Norway’s commitment to ensuring data privacy and security in research environments.

TSD remains integral to the national e-infrastructure, supporting Norwegian research since 2018. In 2024, TSD provided access to 1,600 CPU cores and 1,370 TB of storage, including general and HPC-specific storage solutions. The platform also supported demanding workloads with GPUs and high-memory nodes, essential for complex statistical analyses, such as those required in personalised medicine. TSD supported a growing user base, with 1,955 active projects involving 11,953 users and 8,237 individual users. The service continues to be a crucial tool for the research community, enabling efficient handling and processing of sensitive data.

TSD’s Plans for 2025

In 2025, TSD plans to implement improvements, including procuring an additional computational cluster, Colossus 3.0, for high-demand tasks. Additionally, the TSD platform will integrate more robust user management systems, including superuser tracking and advanced monitoring of resource usage.

The next phase will also involve further optimising the DevOps processes, focusing on scaling its test environment and rolling out production features. Key tasks will include expanding the system’s capabilities with enhanced APIs, supporting the transition to OKD environments, and ensuring that all developments align with the needs of its user base.

Advanced User Support (AUS)

Advanced User Support (AUS) lets the experts within NRIS spend dedicated time going deeper into researchers’ challenges. The work often has a high impact, and the results can be remarkable.

In 2024, the AUS activities included some interesting collaborative projects covering advanced usage across nearly all our infrastructure and services, from TSD (sensitive data) to NIRD.

As technologies and research methods advance, so does the advanced user support. In 2024, we restructured how researchers can obtain advanced support and established the Extended User Support (EUS) mechanism, supported by a new High-Level Support Team (HLST) with some of the savviest experts in NRIS. We encourage all projects using our infrastructure services to consider if this could be useful.

We also want to highlight the AUS User Liaison collaborations. This framework allows experts to have a foot inside NRIS activities while maintaining a close tie to specific application communities, major research infrastructures and centres of excellence.

EasyDMP

Data planning is essential to all researchers who work with digital scientific data. The easyDMP tool is designed to facilitate the data management process from the initial planning phase to storing, analysing, archiving, and finally sharing the research data at the end of the project. easyDMP currently has 1,644 users and 1,742 plans from 263 organisations, departments or institutions.

Although creating a plan is a crucial aspect of data management, it is not the sole challenge. Plans require regular review, sharing, execution, and other processes. The actionability of data management rules has become increasingly important in data-driven science, particularly in the era of artificial intelligence. In 2024, we continued our internal discussions regarding the functionalities of data management tools that would best meet the needs of researchers, providers, and funders.

User Support

Basic user support operates a national helpdesk that handles user requests, including access, system usage, and software issues. Support tickets are managed by highly competent technical staff from NRIS.

Our Annual User Survey, conducted to gather feedback and enhance support services, consistently shows high user satisfaction. The results indicate that respondents remain very satisfied with the support provided to project managers and regular users.

We will continue our efforts to maintain this high standard and further improve our services.

Maximising efficiency with NRIS Training

Having access to comprehensive and relevant training is crucial for users of national e-infrastructure services. These courses ensure users can fully leverage available resources, optimising their research and development processes.

Enhancing User Proficiency

In 2024, our NRIS Training Team has been busy organising courses and activities to help researchers utilise the Norwegian research infrastructure services.

The team keeps expanding its training portfolio. Notably, the number of sessions in 2024 increased substantially compared to previous years. We recorded 265 registrants for training events organised by NRIS Training. The team also collaborates on training activities with external partners such as CodeRefinery, Carpentries, ENCCS, and Aalto University, thereby substantially extending its reach and impact.

Tailored training through user feedback

The courses typically include a mix of talks, practical hands-on instructions, and problem-solving exercises delivered as in-person events or as online courses with remote participation.

Input from our Annual User Survey and shorter surveys conducted after training sessions is vital for tailoring training sessions to the most requested topics. This has resulted in the provision of self-paced lesson materials, concise training videos, and a mix of basic, intermediate, advanced, and highly advanced training.

In December 2024, the NRIS Training Team met to evaluate the past year’s training activities and plan for the future.

Onboarding for the new supercomputer Olivia will naturally be a key focus for the team during autumn 2025.

The team will further develop the collaboration between NRIS Training and efforts in the NAIC project (more about this project below), where we anticipate a demand from AI factory users.

Courses offered by NRIS Training are recorded and published on our YouTube channel.

8. Key collaborations nationally and across Europe

Strengthening the AI infrastructure with the Norwegian AI Cloud (NAIC)

The Norwegian Artificial Intelligence Cloud (NAIC) is a five-year project, initiated in 2021 with three years of funding from the Research Council of Norway. NAIC aims to strengthen Norway’s AI and machine learning infrastructure to support education, research, and innovation.

NAIC focuses on optimising AI infrastructure, enabling users to leverage resources more effectively. The NAIC Orchestrator simplifies virtual machine provisioning across cloud platforms, ensuring a consistent user experience. Additionally, the NAIC Jobanalyzer tool has grown in capability, offering detailed resource usage reports and job performance analysis, which will pave the way for optimising infrastructure usage and decision-making based on real-time data.

In 2024, NAIC introduced key infrastructure upgrades, including enhanced benchmarking, storage solutions, and GPU investments to better support AI and HPC research. One of the key developments was the advancement in Benchmarking and Real-Time Profiling for Informed Decision-Making. This initiative provides users with actionable insights into resource utilisation, helping them make informed decisions about job configurations and resource allocation.

Building a strong AI community

The NAIC User Forum fosters knowledge-sharing and user collaboration, enhancing transparency and engagement.

A notable addition in 2024 was the introduction of the NAIC Storage Cache, developed in collaboration with Sigma2, UiT, and the Service Platform Team.

This storage solution will utilise IBM's AFM caching technology, enhancing data accessibility and download speeds for Large Language Models (LLMs) and datasets stored in the NIRD Data Lake. This system improves efficiency by optimising local resources and streamlining access to large datasets for AI and machine learning.

NAIC has maintained its commitment to training and dissemination and organised several workshops and courses throughout 2024, including sessions on performance analysis and optimisation on LUMI, accelerating genomics workflows on GPUs, and using HPC resources for advanced AI topics. NAIC also contributed to significant conferences like the Northern Lights Deep Learning Conference.

Photos from NAIC's website.

As part of the infrastructure development for 2024, a considerable portion of the budget was allocated to procuring GPUs for the Norwegian Research and Education Cloud (NREC), enhancing the computational capacity of the platform for all partners. The allocation and investment in infrastructure were designed to ensure that NAIC’s resources are scalable and aligned with the growing demand from AI researchers.

In 2025, NAIC will further enhance its infrastructure and expand support for AI workflows.

Seven AI Factories to advance Europe's AI leadership

In December, we announced our partnership with LUMI-AI, the successor of the LUMI supercomputer and the new pan-European supercomputer and AI Factory. Finland will lead the new LUMI AI Factory consortium, which includes the Czech Republic, Denmark, Estonia, Norway, and Poland.

LUMI-AI will be one of seven AI Factories selected by the EuroHPC Joint Undertaking (JU) to combine AI and supercomputing, driving European AI leadership and accelerating research and innovation.

Through Sigma2, Norway will invest 215 million NOK in the LUMI-AI hardware. The investment ensures that Norwegian researchers have sufficient resources to build on their success with the current LUMI supercomputer, and it supports the Norwegian government's digitalisation strategy.

The Norwegian Research Council will contribute an additional 30 million NOK to establish a national part of the AI Factory.

Establishing a National AI and HPC Ecosystem

With the planned launch of the LUMI AI Factory project and national AI Factories, Sigma2 will play a key role in developing the Norwegian AI Factory. This national collaboration aims to integrate AI and HPC to support research, public administration, and industry.

AI-generated illustration.

As the Norwegian Research Council report advocates for a single provider to manage supercomputing needs across sectors, this initiative will help create a scalable and sustainable AI ecosystem, aligning with European efforts like EuroHPC and the LUMI AI Factory.

Building the Norwegian AI Factory

The Norwegian AI Factory will be developed as a platform for AI-driven innovation, bringing together universities, research institutions, public administration, and industry.

Sigma2, in collaboration with key national stakeholders including UiO, UiB, UiT, NTNU, SINTEF, NORCE, Simula, and public sector entities like Digitaliseringsdirektoratet (DigDir) and the Research Council, will facilitate access to national and European AI computing resources.

Infrastructure and integration

As part of the broader effort to modernise Norway’s digital infrastructure, the AI Factory will integrate into an expanded Sigma2 framework:

- Federated data management: Establishing a centralised AI model repository integrated with NIRD storage.

- Hybrid AI-HPC workflows: Developing seamless interoperability between HPC clusters, cloud-native AI environments, and federated European infrastructures.

- AI-optimised HPC & cloud services: Enabling researchers and innovators to access AI-dedicated HPC resources through LUMI-AI and national cloud services.

Looking ahead

The next phase will ensure the continued growth and integration of AI and HPC in Norway. A key priority is the transition of Norwegian AI Cloud (NAIC) resources to national HPC services, securing long-term availability beyond 2026. Additionally, efforts will focus on operationalising OpenStack for AI workloads and improving cloud-HPC integration.

AI-driven public services will be further developed, leveraging AI for government applications and digital transformation. Strengthening industry participation is also a priority by supporting SMEs and enterprises in AI adoption. At the same time, international cooperation will expand, reinforcing Norway’s role in European AI and HPC collaborations.

LUMI: The old wolf is still howling

The LUMI pre-exascale system, in which Norway owns a stake through Sigma2, has reached its final configuration. It is still the most powerful and significant European system, breaking ground in AI projects and helping researchers achieve outstanding results.

LUMI has seen very high utilisation through 2024, and with the increased activity within the field of AI, queuing times have increased. Several Norwegian projects have success stories from using LUMI, with compute resources allocated from the national share or the EuroHPC JU’s system share. Two are highlighted in this annual report.

NRIS has participated actively in the LUMI Consortium, providing two experts to the LUMI User Support Team (LUST) and experts to the various interest groups. Sigma2 was represented by a member on the Operational Management Board (OMB) throughout 2024.

With increased activity, the LUMI User Support Team and the two NRIS members dedicated to this team have been busy supporting users and organising training events.

Building on new and established collaborations

Building and maintaining strong international partnerships is important in times of global uncertainty to ensure that we stay at the forefront of expertise and retain access to new technology. This report highlights our involvement in international collaborations that strengthen Norway’s e-infrastructure services.

EuroHPC Joint Undertaking

The EuroHPC Joint Undertaking initiated important project calls and procurements during 2024, some directly or indirectly involving Sigma2 and NRIS. The most significant was the call for new AI-optimised HPC systems and the establishment of AI Factories.

As AI technologies and methodologies advance swiftly, Europe needs to actively develop and strengthen the ecosystem of frameworks, environments, tools and services required. Although this strategic effort is aimed at research and innovation, it also extends to public administration and industry.

Together with five other countries, Norway, represented by Sigma2, is part of the LUMI-AI consortium led by CSC (IT Center for Science) in Finland. Together with the EuroHPC Joint Undertaking, the LUMI-AI consortium will make a major investment in the LUMI AI-optimised HPC system in Kajaani, which will replace the current LUMI system and additionally include a quantum computing accelerator partition, LUMI-IQ.

Equally important is the formation of the LUMI AI Factory. This is a three-year project centred around AI-optimised HPC systems for supporting and advancing AI usage. Each member country of the LUMI-AI consortium will have a national AI Factory, and these will collaborate around the central LUMI AI Factory.

In 2024, construction finally started on the LUMI-Q system in Czechia, in which Norway is participating through Sigma2, Simula and SINTEF. It is now expected to become available for researchers in mid-2025.

The EuroHPC National Competence Centres initiative is now in its second phase, and the Norwegian Competence Centre for HPC, headed by Sigma2, has continued to support Norwegian industry and public administration in groundbreaking projects utilising HPC and AI.

Norway is represented on the EuroHPC JU’s Governing Board by the Research Council, and Sigma2 supports the Research Council as an adviser. As the national e-infrastructure provider, we are responsible for implementing Norwegian participation in EuroHPC. This arrangement has been working very well, largely thanks to the excellent collaboration with the Research Council and Sigma2’s experience through participating in PRACE and other European projects for many years.

The European Open Science Cloud (EOSC)

The European Open Science Cloud (EOSC) aims to create a FAIR data and service network for European research. It unites existing infrastructures in a federated system, allowing researchers to share, find, and reuse data, tools, and services more effectively.

EOSC — 2024 milestones

Throughout 2024, EOSC continued to evolve its vision of creating a federated and open environment for European research and innovation.

One highlight was the EOSC Symposium 2024. This event brought together a diverse range of stakeholders, including policymakers, funders, research institutions, and data infrastructures, to discuss the future direction of EOSC post-2027. Key topics included the integration of European Common Data Spaces and the expansion of the EOSC Federation.

Another major milestone in 2024 was the release of the EOSC Federation Handbook, a comprehensive guide to the governance and operational tasks within the EOSC Federation. This document is crucial in formalising the EOSC Federation's operations and ensuring continued collaboration and interoperability across European research infrastructures. Sigma2 played a key role in the development of the handbook.

These efforts in 2024 highlight EOSC's ongoing commitment to enabling open science, enhancing data access, and supporting collaborative research across Europe, positioning Europe as a global leader in research data management.

As a key partner in various EOSC-related initiatives, we further our contributions by participating in the EOSC-ENTRUST project to create an interoperability framework for sensitive data services.

EOSC — Entrust

EOSC-ENTRUST, launched in 2024 as part of the European Open Science Cloud (EOSC) initiative, builds on work by Sigma2 and partners like ELIXIR Norway, UiO, UiB, and NTNU, and several international collaborators.

Funded through the INFRA-EOSC call, the project aims to create a federated network of sensitive data services within EOSC. Led by ELIXIR and EUDAT CDI, EOSC-ENTRUST will design and demonstrate an interoperability layer to support this federation across Europe.

The goal is to enable seamless access to and analysis of sensitive data across various sectors by developing a robust, interoperable framework. Sigma2 and EUDAT partners are exploring the sustainability of this model with the involved universities. This builds on national efforts around Trusted Research Environments (TRE), leveraging UiO's Sensitive Data Services (TSD) and extending collaboration to UiB and NTNU.

In 2024, EOSC-ENTRUST focused on several key activities:

- Project Initiation and Kick-off: The project officially commenced in early 2024, with the first partner meeting held in March to align the project’s objectives and strategies.

- Interoperability Framework Development: A major aspect of EOSC-ENTRUST's work in 2024 was developing a federated data access and analysis framework. This work addresses legal, organisational, technical, and semantic challenges to ensure seamless interoperability between sensitive data services across Europe.

- Engagement with Driver Projects: To test and inform the framework, EOSC-ENTRUST identified several driver projects across diverse fields such as genomics, clinical trials, and social sciences. These projects serve as models to demonstrate how federated workflows can handle sensitive data securely.

- Collaboration with European Initiatives: EOSC-ENTRUST collaborated with other EU-funded projects, including SIESTA and TITAN, to enhance the European data ecosystem and integrate new trusted environments for managing sensitive data.

- Sustainability and Policy Development: As part of the long-term vision, the project worked on creating sustainability models for the network and developed policy guidelines to ensure the operational continuity of the Trusted Research Environments within EOSC.

By the end of 2024, EOSC-ENTRUST had established the foundation for an interoperable, federated ecosystem of sensitive data services, streamlining access and enabling secure data analysis across Europe. This effort supports the broader goal of making sensitive data more accessible and usable for researchers while maintaining high standards of security and privacy.

Nordic e-Infrastructure Collaboration (NeIC)

Sigma2 is a partner in NeIC – the Nordic e-Infrastructure Collaboration – which unites the national e-infrastructure providers from the Nordic countries and Estonia. We participate in several collaboration projects under the NeIC umbrella.

NeIC: Development of best-in-class e-Infrastructure services beyond national capabilities

2024 has been a transitional period for NeIC as it redefines its structure and operations in preparation for the new phase of its collaboration starting in 2025. With the expiration of the current Memorandum of Understanding (MoU) at the end of 2024, NeIC partners, including the Nordic e-infrastructure providers and Estonia, have worked to finalise a new governance and financial model, ensuring a sustainable path forward for Nordic collaboration on digital infrastructures.

Strategic Re-orientation for 2025 and beyond

The focus will be on maintaining a strong Nordic presence in the European e-infrastructure landscape while fostering collaboration in research and other sectors such as health data and public administration.

Governance and Collaboration Agreement

The governance model has been revised by establishing the Strategy Committee, composed of representatives from the national e-infrastructure providers. The new model will empower the Strategy Committee to make strategic decisions, oversee budget allocations, and guide the implementation of the organisation’s strategy. A new collaboration agreement reflecting these changes will guide the operation of NeIC from 2025 onward.

Key Strategic Objectives

Three critical strategic priorities have been highlighted for NeIC in 2025:

- Securing New Funding: Establishing a portfolio of new Nordic-funded projects and obtaining EU funding as NeIC itself.

- Sustaining Existing Projects: Ensuring the success of ongoing projects like Puhuri3 and Federated Resource Management (FRM).

- Connecting Competencies: Fostering collaboration and knowledge exchange among national providers to build a robust e-infrastructure ecosystem.

Financial Outlook and Budget for 2025

The financial landscape remains uncertain as national funding agencies finalise their commitments for 2025. A draft budget has been proposed, with a significant focus on the sustainability of key projects. Partner contributions, which include membership fees and external funding, will provide the necessary resources to continue operations. The new budget will also accommodate potential changes in funding levels and future collaborations.

CodeRefinery 3

CodeRefinery is a project under NeIC providing researchers with training in the necessary tools and techniques to create sustainable, modular, reusable, and reproducible software. It acts as a hub for FAIR (Findable, Accessible, Interoperable, and Reusable) software practices.

In 2024, CodeRefinery continued its mission to enhance research software development skills across the Nordic region. The initiative offers free online courses and materials, focusing on tools often overlooked in academic education, to promote reusability, reproducibility, and openness in research software.

Workshops and Training Sessions

Throughout 2024, CodeRefinery conducted several workshops aimed at improving research software practices. Participants reported significant improvements in their coding practices, including better use of version control systems like Git, enhanced code documentation, and increased confidence in collaborative coding.

The initiative thereby delivers on its goal of fostering a community of researchers committed to sustainable and reproducible research software practices.

Puhuri

Puhuri, another NeIC project, is a cloud service that helps HPC centres, supercomputers such as the EuroHPC LUMI supercomputer, and data centres efficiently manage and bill for compute resources, making high-performance computing more accessible for researchers and industrial users across Europe.

The Puhuri project is divided into multiple phases referred to as Puhuri1, Puhuri2, and Puhuri3. Each phase builds upon the previous one, aiming to enhance and expand the capabilities and reach of the Puhuri cloud service.

In 2024, the Puhuri2 project continued to enhance cross-border access to HPC resources for Nordic researchers and focused on ensuring sustainability and expanding the system's functionalities.

Plans for Puhuri3 in 2025

Puhuri3 is scheduled to commence in May 2025, with plans to introduce new features and further improvements to the Puhuri system. Puhuri3 aims to address emerging challenges, meet the evolving needs of researchers in the region, and contribute significantly to advancing scientific research in the Nordic region.

Nordic Tier-1

The Nordic Tier-1 (NT1) is a collaborative initiative under the NeIC umbrella. Here, the four Nordic CERN partners collaborate through NeIC and operate a high-quality and sustainable Nordic Tier-1 service supporting the CERN Large Hadron Collider (LHC) research programme.

It is an outstanding example of promoting excellence in research in the Nordic Region and globally, and it is the only distributed Tier-1 site in the world. There are 13 Tier-1 facilities in the Worldwide LHC Computing Grid (WLCG), and their purpose is to store and process the data from CERN.

NT1 continues to serve the LHC's vast data storage and processing needs, contributing to key experiments, particularly ATLAS and ALICE, which are vital to advancing our understanding of fundamental physics. In the past year, the Nordic Tier-1 facility maintained its position among the top three Tier-1 sites according to the availability and reliability metrics for the ATLAS and ALICE Tier-1 sites, consistently meeting the high demands of the LHC’s extensive data processing requirements.

The combined efforts of the Nordic nations have led to an even more robust and efficient operation. By synchronising national contributions, NT1 has expanded its capacity to support large-scale storage and computing requirements, yielding significant cost savings while enhancing scientific outcomes.

The continued pooling of resources, expertise, and infrastructure further strengthens the Nordic contribution to global research efforts, ensuring that the region remains at the forefront of scientific discovery and technological innovation. By combining the independent national contributions through synchronised operation, the total Nordic contribution reaches a critical mass with higher impact, saves costs, pools competencies and enables more beneficial scientific returns by better serving large-scale storage and computing needs.

NeIC NordiQuEst Project

The Nordic-Estonian Quantum Computing e-Infrastructure Quest (NordiQuEst) made significant strides in integrating quantum computing with HPC across the Nordic region.

This collaborative initiative, led by NeIC, involves partners from Denmark, Estonia, Finland, Norway, and Sweden, including institutions such as Chalmers University of Technology, CSC – IT Center for Science, DTU Technical University of Denmark, SINTEF, Simula Research Laboratory, University of Tartu, and VTT Technical Research Centre of Finland.

Key Developments in 2024

Integration with supercomputer LUMI: NordiQuEst successfully integrated quantum computing capabilities with the pre-exascale LUMI supercomputer, enhancing the Nordic HPC infrastructure by adding a quantum computing environment. This integration provides users with a versatile, unified platform comprising several Nordic quantum computers and simulators, facilitating the adoption of advanced quantum computing technologies across various research communities.

Educational Initiatives: The project organised a "Quantum Computing 101" workshop at the NeIC 2024 Conference, offering participants hands-on experience with quantum programming, including submitting quantum jobs to real quantum computers and exploring hybrid quantum-classical algorithms.

Research and Publications: NordiQuEst has contributed to the academic community by publishing a comprehensive paper detailing the project's objectives, infrastructure, and challenges. The paper outlines the integration of HPC and quantum computing systems, addressing technical, operational, and conceptual challenges, and serves as a foundational resource for future developments in hybrid computing infrastructures.

9. How we work: sustainability, organisation, and governance

Sustainability

Our company's strategies and plans state that we should run our organisation sustainably as a purchaser, employer, and company. Several of our projects work directly towards the UN's sustainable development goals.

We are thus continuously committed to sustainable procurements, implementing eco-friendly solutions for hardware, enhancing energy efficiency, and providing researchers with essential resources for advancing climate research.

In 2024, our dedication to sustainability has been demonstrated through several key initiatives. We are discontinuing the previous generation NIRD at UiT and transitioning from regular production at NTNU.

To maximise resource efficiency, disks and controllers from the previous storage system will be repurposed. Additionally, we have published a Disclosure under the Transparency Act, reinforcing our dedication to transparency and responsible practices.

In 2024, we launched a webpage presenting our work on sustainability for those interested in learning more.

For researchers using national e-infrastructure services, emissions accounting involves tracking the carbon footprint of supercomputing usage, data storage, and other digital services. This can support sustainability efforts, help optimise energy consumption, and help meet environmental regulations or goals.

In 2025, we will further develop the emissions accounting for researchers using the national services.

Our organisation

The Norwegian research infrastructure services (NRIS) is a collaboration between Sigma2 and the universities of Bergen (UiB), Oslo (UiO), Tromsø (UiT The Arctic University of Norway) and NTNU.

Together we provide the national supercomputing and data storage services. The services are operated by NRIS and coordinated and managed by Sigma2.

The people behind the Norwegian research infrastructure services.

Overview of NRIS and the teams

NRIS is a geographically distributed network of expertise comprising Sigma2 employees and IT specialists from partner universities. This network ensures that researchers can quickly and easily access domain-specific support.

At the end of 2024, NRIS accounted for 53 full-time equivalents.

Each team within NRIS has its unique responsibilities:

- The GPU Team assists researchers with GPU-accelerating and porting applications.

- The Infra Team operates and ensures the availability of our services.

- The Service Platform Team is in charge of operating the service platform, which is a Kubernetes cluster.

- The Software Team is responsible for installing and maintaining the scientific software and software installation environments on NRIS clusters.

- The Support Team manages daily support, prioritising and escalating tickets and answering user requests.

- The Supporting and Monitoring Services (SMS) Team supports and monitors our internal systems.

- The Training and Outreach Team offers free training events for our users, covering a diverse range of topics.

Sigma2 oversees and holds strategic responsibility for services, including product management and development, Norway's involvement in international e-infrastructure collaborations, and resource allocation administration.

The Research Council of Norway and the university partners (the universities of Bergen, Oslo, and Tromsø, and NTNU) jointly finance our activities.

At the end of 2024, Sigma2 had 17 employees, of whom 24% were women.

In 2025, we will continue to strive for diversity and gender balance and work to promote equality in our organisation, committees, and the Board of Directors.

Our Governance and Board of Directors

Our board is led by the Director of Sikt’s Data and Infrastructure Division. The six other members represent our four consortium partners. Among them are two external representatives from abroad and a director general from a national research institute.

Board members in 2024

- Tom Røtting (Chair), Director for the Data and Infrastructure Division, Sikt

- Solveig Kristensen, Dean, The Faculty of Mathematics and Natural Sciences, UiO

- Ingela Nyström, Professor, Uppsala University

- Ingelin Steinsland, Vice Dean of Research, Faculty of Information Technology and Electrical Engineering, NTNU

- Gottfried Greve, Vice-Rector for Innovation, Projects and Knowledge Clusters, UiB

- Kenneth Ruud, Director General, Norwegian Defence Research Establishment (FFI)

- Arne Smalås, Dean, Faculty of Science and Technology, UiT